Lab Notes

Intent Classification, Named Entity Recognition and Response Generation in Natural Language Processing

What Is Natural Language Processing?

Natural Language Processing (NLP) is an area of computer science and AI concerned with the communication between machines and humans using our own (natural) language. This involves programming computers to allow for the processing of vast amounts of natural language data.

For this piece we will split NLP into two sub-divisions: Natural Language Understanding (NLU) and Natural Language Generation (NLG).

Why Do Machines Need Natural Language Processing?

To put it simply, if we want to be able to communicate with computers using natural language then we need NLP. If you sent a message to the machine saying “Hello”, it’s the NLU component that classifies the intent, which allows the NLG component to produce an appropriate response. Most likely, returning another greeting, “Hello there!”.

Without these fundamental capabilities the machine wouldn’t be able to understand what we are saying. The messages “Hello” or “Bye” would just be a sequence of characters and would differ only in length. NLP helps provide meaning and context to these sequences and allows the AI to formulate the best response.

Natural Language Understanding

NLU is tasked with understanding what the user is trying to say. So, what information are they are attempting to provide, or request. A user’s message is processed and broken down into two parts: the message’s intent, and its entities, if any. For example, if I was chatting with a Horoscope-Bot and I asked the question “What is the horoscope for Aries today?”, the bot should figure out that my intent is something like “get_horoscope” and pick up an entity like “horoscope_sign”: “Aries”. Similarly, if I asked, “What is the horoscope for 28-03”, it should be resolved as the same intent “get_horoscope”, but with different entities “DD”: “28”, “MM”: “03”.

Intent Classification

To decide on the intent of a message a classifier model can be used. An intent classifier simply decides which intent, of a known set of intents, an input belongs to. Models are available which represent each word in the user message as a word embedding. Word embeddings are vector representations of words which means that words are changed into a numeric list so that it is usable by the machine. Similar words are represented by similar vectors and semantic and syntactic aspects of words are captured. To learn more about word–to-vectors check out this paper [1]. All the word embeddings of a message can then be averaged, and a search performed over the set of intents to decide which is most likely the correct option. There are pre-trained models available that have been trained on datasets already, which is useful when starting a project as one might not have a large suite of training data yet. Although, if you have your own dataset for a specific use case then instead of using pre-trained embeddings it is possible to train word embeddings from scratch [2]. This method counts how often distinct words of the training data appear in a message and uses that as input for the classifier. This can be useful for more domain specific bots, with plenty of training data, that require certain acronyms and terms.

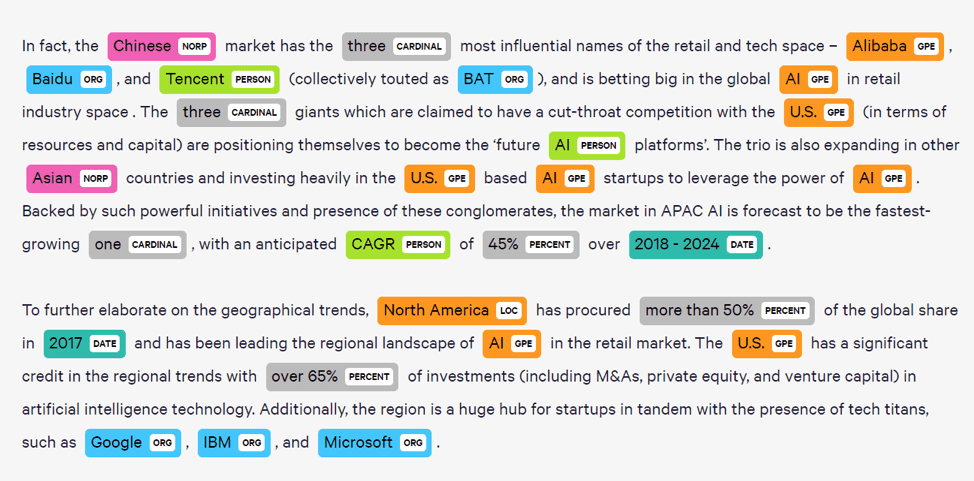

Named Entity Recognition

As we saw in the example above understanding the intent of a message is of no use if you cannot extract the required information such as dates or names. An entity recognition model takes as input a sequence of tokens, such as word embeddings like with the intent classifier, and provides each with a tag, from a set of tags, for each token in the sequence. The most common types of entities used as tags are names, locations, organisations, dates and times, quantities and monetary values. To be able to differentiate entities of the same type that are beside each other in a message the BIO tagging scheme is often used. “B” denotes the beginning of an entity, “I” stands for inside and is used for all words of an entity except the first one, and “O” means that no entity is present. In the following example “PER” means person:

“Shay[B_PER] van[I_PER] Dam[I_PER] and[O] Dave[B_PER] Dineen[I_PER]”

A common problem that NERs run into is that the extraction does not generalise to unseen values of an entity. This can be due to a lack of training data being provided to the model or an over fitting of the model to the data. For both issues the best option is to get more data.

Natural Language Generation

Once the intent and entities of a user’s message is known, it’s time for the machine to respond with its own message. There are many ways that the responses can be generated, and some are better than others for certain tasks, but here we will go through three models.

Retrieval-Based Model

A retrieval-based method of generating responses means that the responses themselves are already pre-defined as templates. When the message intent is deduced an appropriate response is selected from a database of responses, and some variable information may be used from the conversations context, such as the users name, to produce the machines message. Retrieval-based methods are useful for task-oriented conversations where the machine or the user has a certain goal in mind and the dialogue is built around accomplishing it. For example, if a user wants to search for a place to eat with a Restaurant Bot then the information that is required to complete the search won’t differ radically for each search:

Hi, welcome the restaurant finder system. You can search by area, price range or food type. How can I help you?

>>> I want cheap food

Crescent Moon Restaurant serves cheap Chinese food.

>>> Is there anything with French food?

Sorry, there is no French restaurant in the cheap price range.

>>> What about in the expensive price range?

Cultured Cuisine serves French food in the expensive price range.The messages that the machine should be producing here won’t be that different for every user as it will be the same information being requested each time, with slight variations. These conversations can be planned out relatively well. By knowing the task, you can guess what responses the user will give back (within reason, there are always edge cases) and therefore it makes sense to have pre-defined templates for the bot to use.

Sequence-To-Sequence Model

Then there are sequence-to-sequence (seq-2-seq) models [6] where a fixed length input is mapped to a fixed length output, usually differing in size. These models are used in systems such as machine translation [3] and speech recognition [4], but of course has been adapted for conversational user interfaces. The model is made up of three main parts. The encoder converts the natural language input into a machine-readable vector using several recurrent neural networks (RNNs, or LSTM for better performance), where each accepts a single element of the sequence, collects information about it and propagates it forward. For our problem, an input sequence is a collection of all words from the user’s message, like the word embeddings we saw above. The encoder vector is the final state produced by the encoder and aims to encapsulate the data for all input elements so that the decoder can make accurate predictions. The decoder is then another stack of RNNS. Each RNN takes as input the output of the last unit and produces its own output, called a hidden state. A probability vector is created to help decide on the final output.

This type of model uses deep learning methods and is more complex in nature than the retrieval-based method. It allows for the generation of sentences that may not have been seen directly in the training data which makes conversations more flexible and human-like but also more unpredictable if not trained properly. For example, training seq-2-seq on the Cornell movie-dialogues corpus is popular as the dataset is so large [5], and a typical introduction would go as follows:

>>> Hi! How are you?

I am fine. What are you doing for Christmas?This may seem like a funny response, and it is, but given the vast range of movies within the dataset it’s not strange to think there are many Christmas movies that would contribute to this sort of response. This also highlights the sensitivity of the deep learning model to the training data and therefore, the higher risk.

Text Analysis Model

There are also text analysis models available for the extraction of important information from a document, or documents. Here we will focus on a question-answer model based on a given context. So, given a context, a paragraph from an article or an about page of a website, the bot will be able to respond to the user’s question given the answer is in the context. For example, on Maverick’s website, in the about section, it has this paragraph under “Who we Serve”:

We work with a stable of clients based in Ireland, the UK and Northern Europe. They include industrial manufacturers from a variety of sectors including building materials and industrial machines, technology-based companies including enterprise software and IT services, and professional services companies, in particular professional education providers. Our clients tend to be mid-market, some smaller, some larger. Many would be selling complex, highly technical products and services, to disparate groups of business decision makers, over extended buying cycles.

Given the bot has this context and the user asks, “Where are your clients from?”, the response will be:

“ Ireland, the UK and Northern Europe”

As you can see the answer is pulled directly from the paragraph. This form of text analysis can be useful for when FAQ data is not readily available, or annotated correctly, and would not be feasible to create.

Conclusion – Limitations Of Natural Language Processing?

As you can see there are many ways of strapping your computer with advanced AI and NLP capabilities so it can better understand you in your native tongue. Although, communicating through natural language means you inherit the problems that come with it. Chatting with a person through text can be a seamless experience or it can be almost painful, given poor use of language and grammatical mistakes. These same issues will also arise when interacting with an NLP enabled computer and will likely impact our interactions with them.

Of course, the answer to NLP problems is more NLP! Models for spotting and fixing spelling errors are already available and will only get better over time. A big challenge for computers that can chat with you is that most people haven’t interacted with them this way before and will attempt to test, or mess, with them out of curiosity. You can try and design a conversation to the best of your ability but there are always edge cases. You will run into something you never would have thought of once you get people to use it.

References

[1] https://arxiv.org/abs/1301.3781

[2] https://arxiv.org/abs/1709.03856

[3] https://arxiv.org/pdf/1409.3215.pdf

[4] https://www.isca-speech.org/archive/Interspeech_2017/pdfs/0233.PDF

[5] http://www.cs.cornell.edu/~cristian/Cornell_Movie-Dialogs_Corpus.html